This demonstration will also include an example which seeks to illustrate the k-nearest neighbor method of analysis. Both methodologies were previously demonstrated within the SPSS platform.

If you are unfamiliar with this particular methodology, or if you wish to refamiliarize yourself, please consult the following articles: Dimension Reduction (SPSS) and Nearest Neighbor / Dimension Reduction (Pt. II) (SPSS).

Example:

For this demonstration, we will use the same data set that was previously used to demonstrate the analytical process within SPSS. This data set can be found within this site’s GitHub Repository.

# Be sure to change the ‘filepathway’ so that it matches the file location on your #

# computer #

DimensionReduct <- read.table("C:\\filepathway\\DimensionReduction.csv", fill = TRUE, header = TRUE, sep = "," )

# First, we must remove the ‘ID’ column from the data frame #

DimensionReduct <- DimensionReduct[c(-1)]

# Next we will perform a bit of preliminary analysis with the following code: #

# The function option ‘scale. = TRUE’ requests scaling prior to analysis. If the data #

# frame has already been scaled, set this option to ‘FALSE’. #

pca_existing <- prcomp(DimensionReduct, scale. = TRUE)

# Summary output can be produced with the following functions: #

summary(pca_existing)

plot(pca_existing)

Console Output:

Importance of components:

| | PC1 | PC2 | PC3 | PC4 | PC5 | PC6 | PC7 | PC8 |
|---|---|---|---|---|---|---|---|---|
| Standard deviation | 1.4008 | 1.3598 | 1.0840 | 1.0114 | 0.87534 | 0.76901 | 0.58431 | 0.54010 |
| Proportion of Variance | 0.2453 | 0.2311 | 0.1469 | 0.1279 | 0.09578 | 0.07392 | 0.04268 | 0.03646 |
| Cumulative Proportion | 0.2453 | 0.4764 | 0.6233 | 0.7512 | 0.84694 | 0.92086 | 0.96354 | 1.00000 |

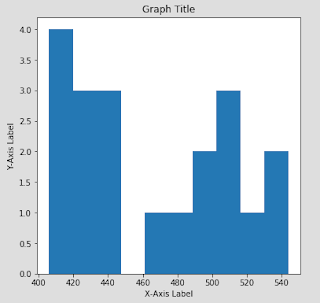

Graphical Console Output:

# To view the eigenvalues which were utilized to generate the above graph: #

eigenvals <- pca_existing$sdev^2

# To view proportional eigenvalue output #

eigenvals/sum(eigenvals)
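To make the link between the eigenvalues and the summary table concrete: with scaled data, the eigenvalues sum to the number of variables, so the first component above accounts for roughly 1.4008^2 / 8 ≈ 0.2453 of the variance. The sketch below reproduces the same relationship on R's built-in USArrests data, which is an assumption made purely for illustration and is not the article's data set. The names `pca_demo`, `eigen_demo`, `prop_var` and `cum_var` are hypothetical.

```r
# Illustrative sketch on built-in data (USArrests has 4 numeric columns)
pca_demo <- prcomp(USArrests, scale. = TRUE)

# Eigenvalues are the squared component standard deviations
eigen_demo <- pca_demo$sdev^2

# Proportion of variance and cumulative proportion per component
prop_var <- eigen_demo / sum(eigen_demo)
cum_var <- cumsum(prop_var)

# Cross-check against the (rounded) values that summary() reports
importance <- summary(pca_demo)$importance
all.equal(unname(importance["Proportion of Variance", ]), prop_var,
          tolerance = 1e-4)
```

Because the data are scaled, `sum(eigen_demo)` equals the number of columns, which is why dividing by the sum and dividing by the variable count give the same proportions.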

# Re-load the data into the R platform #

# Be sure to change the ‘filepathway’ so that it matches the file location on your #

# computer #

DimensionReduct <- read.table("C:\\filepathway\\DimensionReduction.csv", fill = TRUE, header = TRUE, sep = "," )

# First, we must remove the ‘ID’ column from the data frame #

DimensionReduct0 <- DimensionReduct[c(-1)]

# Download and enable the package ‘psych’ in order to utilize the function ‘principal’ #

# Download and enable the package ‘GPArotation’ in order to utilize the #

# option ‘rotate’ #

library(psych)

library(GPArotation)

# The ‘nfactors’ option indicates the number of components to extract #

# The ‘rotate’ option indicates the type of rotation methodology which will be applied #

# The code below generates the principal components requested: #

pca <- principal(DimensionReduct0, nfactors = 3, rotate = "quartimax")

# We must export these scores into an accessible format. The code below achieves such: #

pcascores <- data.frame(pca$scores)

# Prior to initiating the K-Nearest Neighbors process, we must isolate the previously #

# removed ‘ID’ variable in order to have it later act as a classification factor. #

# The re-loaded ‘DimensionReduct’ data frame still holds ‘ID’ as its first column. #

cl <- DimensionReduct[, 1, drop = TRUE]

# We are now prepared to perform the primary analysis. #

# Download and enable the package ‘class’ in order to utilize the function ‘knn’ #

library(class)

# ‘k = 3’ indicates the number of nearest neighbors considered for each observation. #

KNN <- knn(pcascores, pcascores, cl, k = 3)
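Because the call above passes the same scores as both the training and the test set, every observation counts itself among its own nearest neighbors. As a rough sketch of the difference this makes, the example below compares that approach with leave-one-out classification via `knn.cv` from the same ‘class’ package. It uses the built-in iris data as a stand-in, which is an assumption for illustration only; `features`, `labels`, `pred_self` and `pred_loo` are hypothetical names.

```r
library(class)  # provides knn() and knn.cv()

set.seed(1)  # ties among neighbors are broken at random

features <- iris[, 1:4]   # four numeric predictors
labels <- iris$Species    # classification factor

# Same data used for training and testing, mirroring the call above
pred_self <- knn(features, features, labels, k = 3)

# Leave-one-out: each row is classified without access to itself
pred_loo <- knn.cv(features, labels, k = 3)

# Share of rows whose predicted class matches the true class
mean(pred_self == labels)
mean(pred_loo == labels)
```

The leave-one-out figure is usually the more honest of the two, since no observation gets to vote for its own class.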

# With the analysis completed, we must now assemble all of the outstanding #

# components into a single data frame. #

FinalFrame <- data.frame(DimensionReduct , pcascores)

FinalFrame$KNN <- KNN

This data frame should resemble the following:

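# Create a 3D graphical representation for the K-Nearest Neighbor analysis #

# Download and enable the package ‘plot3D’ in order to utilize ‘scatter3D’ and ‘text3D’ #

library(plot3D)

scatter3D(FinalFrame$RC3, FinalFrame$RC2, FinalFrame$RC1, phi = 0, bty = "g", pch = 20, cex = 2)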

# Create data labels for the graphic #

text3D(FinalFrame$RC3, FinalFrame$RC2, FinalFrame$RC1, labels = FinalFrame$ID,

add = TRUE, colkey = FALSE, cex = 1)

If you would prefer to have the data presented in a graphical format which is three dimensional and rotatable, you could utilize the following code:

# Download and enable the package 'rgl' in order to utilize the function 'plot3d' #

library(rgl)

# https://www.r-bloggers.com/turning-your-data-into-a-3d-chart/ #

plot3d(FinalFrame$RC3, FinalFrame$RC2, FinalFrame$RC1, col = blues9, size=10)

This creates a new window which should resemble the following:

If you drag your mouse cursor across the graphic while holding the left mouse button, you can rotate the image display.

One final note, unrelated to our example demonstrations: if you are performing a nearest neighbor analysis and your data has not been previously scaled, be sure to scale your data prior to proceeding with the procedure.
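As a minimal sketch of that scaling step, the example below standardizes a small made-up data frame with base R's `scale()` before running a leave-one-out nearest neighbor classification. The data, the column names `income` and `score`, and the object names `toy`, `group`, `raw_pred` and `scaled_pred` are all hypothetical and exist only to show two predictors measured on very different scales.

```r
library(class)  # provides knn.cv()

set.seed(42)

# Hypothetical data: 'income' spans thousands, 'score' spans single digits
toy <- data.frame(
  income = c(rnorm(20, 30000, 5000), rnorm(20, 32000, 5000)),
  score  = c(rnorm(20, 2, 0.5), rnorm(20, 6, 0.5))
)
group <- factor(rep(c("a", "b"), each = 20))

# Center each column to mean 0 and rescale to standard deviation 1
toy_scaled <- scale(toy)

# Leave-one-out nearest neighbor classification, before and after scaling
raw_pred <- knn.cv(toy, group, k = 3)
scaled_pred <- knn.cv(toy_scaled, group, k = 3)

# Share of rows classified correctly in each case
mean(raw_pred == group)
mean(scaled_pred == group)
```

Without scaling, distances are dominated by whichever column has the largest numeric range, so a well-separated but small-valued variable such as `score` here contributes almost nothing to the neighbor search.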